Development Processes in Your Business – Part 1

I have the privilege of working closely with several of our software vendors to help them improve their products, both for our use and for their entire client base. Through this collaboration, I’ve discovered some fascinating correlations between software development processes and business development processes. These similarities have significantly influenced my perspective on development across various areas.

In this post, I will delve into the history of the two primary development processes and discuss their pros and cons. In part two, I will explore how these different processes manifest in business.

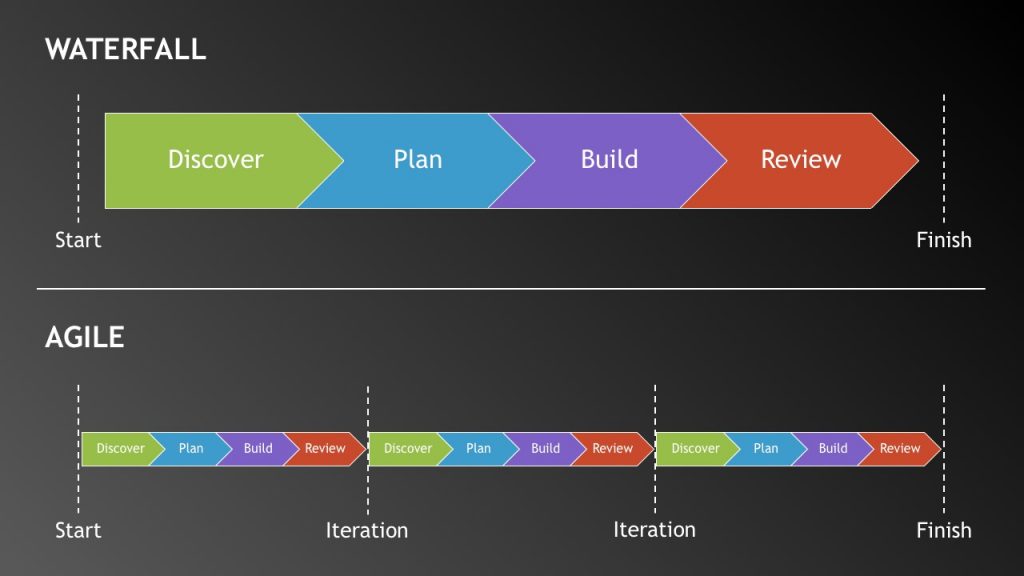

Let’s start with a bit of history. Traditionally, software was developed using what is known as the waterfall process. This approach is still in use today, albeit less commonly. From the user’s perspective, this method resulted in software releases occurring annually, every three years, or on a similar schedule. Developers would begin by drafting a list of changes, scoping them out, and placing them on a roadmap. They would then work on implementing these changes. As the release date approached, pressure would mount to finalize the updates. This sometimes led to releasing unfinished features, delaying the release, or postponing certain changes until the next release cycle, which could be one to three years later. One of the main reasons for this approach was the significant effort required to package, distribute, and support each release.

As Internet connectivity has improved, cloud environments have become more prevalent. One of the advantages of cloud environments (though there are disadvantages as well) is that developers don’t usually have to worry about thousands of deployed environments for their software. They typically only need to manage one or two environments. Additionally, developers usually have complete control over the environment, a freedom they don’t have when the software is deployed at a client’s site, on a client’s network, or on a client’s servers.

While the cloud isn’t the only factor that initiated this shift, it created a much more feasible environment for a different development process called agile. Agile development, at its core, is exactly what its name says: agile. This methodology takes proposed changes and breaks them into bite-sized pieces. A team then focuses on developing, testing, and deploying each piece in what is often called a sprint. Depending on the complexity of the change, this usually happens in days, weeks, or months, rather than the waterfall’s timeline of months and years.

In the waterfall process, much care is usually taken to ensure that any change is done right from the start, as it may be years before it can be changed again. In the agile process, changes that miss the mark can usually be adjusted rapidly. This gives agile developers the freedom to quickly innovate and deploy new features and functions to end users. Because the changes in agile development are typically smaller, the bugs tend to be smaller and easier to find and fix. Even if bugs get through, agile development allows for faster recovery from failures because patches can be deployed rapidly.

As with anything, there are pros and cons to each method. Waterfall development still has its legitimate place, especially for more significant changes that have far-reaching impacts and justify the time investment needed to vet them fully. Agile development enables more rapid innovation: it gets new features into production faster and lets you iterate on them quickly, but it also interrupts the end user more often because the product is constantly changing.

Stay tuned for part two, where I will discuss how these development processes translate into business practices and the pros and cons of using them in your business.
